The Beginning

In a time, long, long, long ago, in 1995, there was a browser called Netscape.

Ah, old memories.

The minds behind this browser had begun to realize that the simple static webpages of the internet were restrictive, and lacked the ability to respond dynamically to user interaction or input. Netscape wanted to respond to this need by generating reactive functionality within their browser, but they felt the contemporary language of the web, Java, was ill-suited to the task.

So they gave Brendan Eich, clearly someone capable of working under pressure, 10 days to create a programming language to perform this task. And lo and behold, he delivered!

LiveScript (the precursor to Javascript) was developed. Microsoft also developed its own language, reverse-engineered from Netscape's, with significant differences from the Netscape version. These two versions of JS battled it out in something old people remember as the ‘browser wars’ (just kidding you guys). Eventually this was fixed when the ECMA standards specified a single version of the language and we all lived happily ever after.

But who cares? Well, Brendan probably cares. And you should too, because the capabilities that Javascript provided to the browser transformed the way we experience the Internet.

The Age of the Static Page

Imagine only being able to click on buttons that simply took you to another page. Imagine inputting information in a search box that, when entered, also took you to another page. If you wanted to further filter the results, you had to reload the page. Every time you wanted to see different data, or have something respond on the page, you had to reload the page or navigate to a new page.

Sounds real fun.

Obviously this sucked. LiveScript, and eventually Javascript, was developed to address this issue. Eventually, a technique called Dynamic HTML was developed, which allowed browsers to move things around the screen and even change them in response to user interaction. In addition to this, Microsoft developers had developed a technology called XMLHttpRequest around 2000, but then the web development bubble burst and web development in general slowed dramatically. Asynchronous server communications were around, but they were not particularly prominent.

“And some things that should not have been forgotten were lost.”

However, in 2005, a developer named Jesse James Garrett wrote an essay describing a methodology his team utilized, where several technologies were used to mimic the responsiveness of desktop applications. He coined the combination of technologies that enabled this responsiveness AJAX.

AJAX is Born

AJAX stands for Asynchronous Javascript and XML. In Jesse’s own words:

“The Ajax engine allows the user’s interaction with the application to happen asynchronously — independent of communication with the server.”

Information can be displayed on a webpage while data is still being fetched or processed in the background. This meant that changes could be made to the page, or part of the page, without the whole page having to be reloaded. This made pages more interactive and performant.

The term AJAX refers to the technologies which make this kind of webpage possible.

How specifically do these technologies function? Well, in order to communicate with another server via Javascript, you need a JS object to provide that functionality. The object originally used for this is the aforementioned XMLHttpRequest. These days, one of the most popular APIs for communicating with servers is the Fetch API, a newer, Promise-based alternative to XMLHttpRequest. But! Before we talk about the Fetch API, we need to talk about Promises, the foundational modern JS methodology for asynchronous operations.

“I promise to return the result of this asynchronous operation, come hell or high water!”

Promise Me This

Intro to Promises

A promise, I should remind you, is (and I stole this definition pretty much straight from MDN so don’t sue me):

- a JS returned object which represents the result of an asynchronous operation,

- to which you attach callbacks,

- which are then executed depending on what the state of the promise is when it completes.

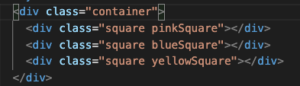

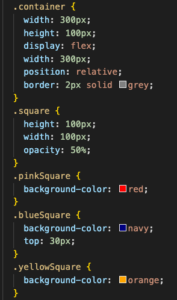

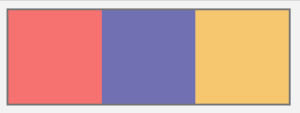

Check out this code:

getSomeData("urlWhereDataLives").then(doThisFunction);

Our function getSomeData is an asynchronous one. While that request to the url is pending, the function getSomeData returns a promise. The promise (which is a JS Promise object) has a then() method, which we invoke and pass a callback function. When the data we requested in getSomeData finally arrives, it is passed to the callback function we passed to then().
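To make that a bit more concrete, here is a minimal sketch of what is going on. The body of getSomeData below is a hypothetical stand-in (it fakes the network delay with setTimeout rather than making a real request), and the log array is only there so we can see the order in which things happen.

```javascript
// Hypothetical stand-in for getSomeData: it returns a Promise right away
// and fulfills it a little later, the way a real network request would.
function getSomeData(url) {
  return new Promise((resolve) => {
    // setTimeout simulates network latency; no real request is made.
    setTimeout(() => resolve(`data from ${url}`), 10);
  });
}

// Records the order in which things happen.
const log = [];

function doThisFunction(data) {
  log.push(`callback received: ${data}`);
}

// getSomeData hands back a Promise immediately, while the "request" is pending.
const promise = getSomeData("urlWhereDataLives");
promise.then(doThisFunction);

// This line runs before the callback, because the data has not arrived yet.
log.push("request started");
```

The thing to notice is that the rest of the script keeps running while the promise is pending; doThisFunction only gets called once the data shows up.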

If you want to handle errors you can pass it a second function:

getSomeData("urlWhereDataLives").then(doThisFunction, oopsErrorHappened);

If there is an error, the second callback, oopsErrorHappened, is invoked. However, this is not as common as using the catch() method:

getSomeData("urlWhereDataLives").then(doThisFunction).catch(oopsErrorHappened);
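Here is a small sketch of both styles side by side. The getSomeData below is a hypothetical stand-in that always fails, just so the error path actually runs, and the handled array is only there to record what happened.

```javascript
// Hypothetical stand-in that always fails, so the error path runs.
function getSomeData(url) {
  return Promise.reject(new Error(`could not load ${url}`));
}

// Records which callbacks ran.
const handled = [];

function doThisFunction(data) {
  handled.push(`got ${data}`);
}

function oopsErrorHappened(error) {
  handled.push(`error: ${error.message}`);
}

// Style 1: pass the error handler as the second argument to then().
const first = getSomeData("urlWhereDataLives").then(doThisFunction, oopsErrorHappened);

// Style 2: put catch() at the end of the chain. Unlike style 1, this would
// also catch an error thrown inside doThisFunction itself.
const second = getSomeData("urlWhereDataLives").then(doThisFunction).catch(oopsErrorHappened);
```

One practical reason catch() is more common: it sits at the end of the chain, so it sees errors from every step before it, not only from the original operation.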

This seems simple, but in the words of David Flanagan,

“Promises seem simple at first….But they can become surprisingly confusing for anything beyond the simplest use cases.” – Javascript: The Definitive Guide

Real talk, David. Real talk.

Promise Chaining

So getSomeData was an asynchronous function. What if we want to parse the data returned by it in another asynchronous operation? Enter Promise chaining:

getSomeData("urlWhereDataLives").then(parseThisData).then(doSomeFunction)

The function parseThisData initiates an asynchronous operation with the results of getSomeData. Then when parseThisData is complete, the results are passed to doSomeFunction. In this way, the results of one asynchronous operation are passed to another by using the function then() attached to the new Promise objects created when the asynchronous functions are invoked.
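A rough sketch of that chain, with made-up implementations for all three functions (the JSON string and the greeting are assumptions for illustration only):

```javascript
// Hypothetical asynchronous step 1: pretend this text came from a server.
function getSomeData(url) {
  return Promise.resolve('{"name": "Toto"}');
}

// Hypothetical asynchronous step 2: parsing that takes a moment.
function parseThisData(rawText) {
  // Returning a Promise from a then() callback makes the chain wait for it.
  return new Promise((resolve) => {
    setTimeout(() => resolve(JSON.parse(rawText)), 10);
  });
}

// Final step: receives the parsed object, not the raw text.
function doSomeFunction(parsed) {
  return `Hello, ${parsed.name}!`;
}

const chain = getSomeData("urlWhereDataLives")
  .then(parseThisData)
  .then(doSomeFunction);

chain.then((greeting) => console.log(greeting));
```

Each call to then() returns a brand new Promise, which is why you can keep hanging more then() calls off the end.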

Did that make sense? I hope that made sense because this train is rolling on! Choo choo!

Promise Terminology

There are some very specific terms for talking about Promises.

A promise can have 3 states:

- pending – the Promise has no value, it’s chilling

- fulfilled – Success! Some kind of result value has been assigned to the promise

- rejected – no result could be assigned.

When the Promise leaves its pending state, it is resolved*.

- resolved – the Promise is either fulfilled or rejected

*According to the Stack Overflow answer which can be found in my references, this is not *technically* accurate (the precise term for a Promise that is either fulfilled or rejected is "settled"), but for the sake of working with Promises, I will call it good enough.
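If you want to poke at these states yourself, here is a small sketch. There is no direct way to ask a Promise what state it is in, so this leans on Promise.allSettled (which reports how each promise ended up) and Promise.race (which shows that a pending promise loses to one that is already fulfilled). The variable names are my own.

```javascript
const fulfilled = Promise.resolve("some result");
const rejected = Promise.reject(new Error("no result could be assigned"));
const pending = new Promise(() => {}); // never leaves the pending state

// allSettled waits for each promise to leave pending, then reports its status.
const settledStates = Promise.allSettled([fulfilled, rejected]).then((results) =>
  results.map((result) => result.status)
);

// A promise that is still pending loses the race to an already fulfilled one.
const pendingCheck = Promise.race([pending, Promise.resolve("still pending")]);

settledStates.then((states) => console.log(states));
pendingCheck.then((value) => console.log(value));
```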

Now let's talk about a common API for handling async operations!

Go Fetch!

The Fetch API is a lovely interface that saves us from the scary oogey-boogey man that is the XMLHttpRequest API, which looks like this:

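// Declared outside the function so the alertContents callback
// (defined elsewhere) can read the request when it fires.
let httpRequest;

function makeRequest() {
  httpRequest = new XMLHttpRequest();

  if (!httpRequest) {
    alert('Giving up :( Cannot create an XMLHTTP instance');
    return false;
  }

  // alertContents runs every time the request changes state
  httpRequest.onreadystatechange = alertContents;
  httpRequest.open('GET', 'test.html');
  httpRequest.send();
}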

Ugh. Even David Flanagan calls it “old and awkward”.

XMLHttpRequest: “Hey, you better watch the way you talk about me!”

Fetch has a nice, happy 3-step process for making HTTP requests. I will quote my favorite JS book, “Javascript: The Definitive Guide”, to summarize the steps:

- Call fetch(), passing the URL whose content you want to retrieve.

- Get the response object that is asynchronously returned by step 1 when the HTTP response begins to arrive, and call a method of this response object to ask for the body of the response.

- Get the body object that is asynchronously returned by step 2 and process it however you want.

And that’s it! Nothing bad ever happens and that’s all you ever need to think about! Yay!

Thank Goodness! All my problems are solved! This must be Heaven!

Just kidding. It’s usually not that simple. Let’s dive in, shall we?

It’s also important to note that the Fetch API is Promise-based, and there are two asynchronous steps.

1. Call fetch(), passing the URL whose content you want to retrieve.

Give me your basic authorization credentials in your HTTP request header NOW!

Calling fetch initiates an asynchronous operation. The Promise returned by fetch() resolves to a Response object.

Huh?

Well, this sentence just means exactly what it says: when the Promise is fulfilled, the value it hands to your callback is a Response object, thanks to the magic of the Fetch API. We like the Response object, because it offers us all kinds of fun stuff, including two very important methods for parsing data we get from HTTP requests: json(), and text(). More on that later though.
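You can get a feel for the Response object without touching the network at all. The sketch below builds two Response objects by hand (the body contents are made up) and assumes an environment where Response is available globally, such as a modern browser or a recent version of Node.

```javascript
// Build Response objects by hand so no network is needed.
const jsonResponse = new Response('{"city": "Oz"}');
const textResponse = new Response("hello from the server");

// json() and text() each return a Promise, not the parsed value itself.
const parsedJson = jsonResponse.json();
const parsedText = textResponse.text();

parsedJson.then((data) => console.log(data.city));
parsedText.then((text) => console.log(text));
```

Note that both methods hand back yet another Promise, which is exactly why a fetch call usually ends up with two then() calls in a row.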

Very often the API that you are requesting stuff from needs you to request it in a specific way. You may need to include authorization credentials, or…other stuff.

So when you call fetch, you might need to provide two arguments: one being the API url, the other being an options object that carries the required header information.

//make new Headers object
let headers = new Headers();
//set the header (template literals need backticks, not single quotes)
headers.set("Authorization", `Basic ${btoa(`${myUsername}:${password}`)}`);
//include it in fetch request
fetch("myAPI/whereIGetStuff", { headers: headers }).then(doSomeStuff)
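If you are curious what actually ends up in that header, here is a small sketch you can run without making a request. The helper name basicAuthHeaders and the credentials are made up for illustration, and it assumes Headers and btoa are available globally (modern browsers, recent Node).

```javascript
// Hypothetical helper that builds the Basic authorization header.
function basicAuthHeaders(username, password) {
  const requestHeaders = new Headers();
  // btoa() base64-encodes the "username:password" pair.
  requestHeaders.set("Authorization", `Basic ${btoa(`${username}:${password}`)}`);
  return requestHeaders;
}

// Made-up credentials, for illustration only.
const authHeaders = basicAuthHeaders("Aladdin", "open sesame");

// Inspect the value that would be sent along with the request.
console.log(authHeaders.get("Authorization"));
```

Keep in mind that base64 is an encoding, not encryption, so credentials sent this way are only as safe as the connection carrying them.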

2. Get the response object that is asynchronously returned by step 1…and call a method of this response object…

Sweet! I got my data from the API in my cool Response object! Now what?

Most commonly the response from a server is a JSON object. So we might want to parse it as a JSON object like in the following code, calling the json() method of the Response object…

fetch("myAPI/whereIGetStuff.com")

.then(response => response.json())

.then(output => console.log(output))

Or we might want to check the status:

fetch("myAPI/whereIGetStuff.com")

.then(response => console.log(response.status))
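Checking the status matters more than it might seem, because the Promise returned by fetch() only rejects on network-level failures. A 404 or 500 still arrives as a perfectly ordinary Response. Here is one possible sketch of handling that; checkStatus and loadJson are hypothetical helpers of my own, and the fetchImpl parameter exists only so the function can be tried out with a fake fetch.

```javascript
// Hypothetical helper: turn an unsuccessful HTTP status into an error.
function checkStatus(response) {
  // response.ok is true only for statuses in the 200-299 range.
  if (!response.ok) {
    throw new Error(`Request failed with status ${response.status}`);
  }
  return response;
}

// Hypothetical helper: fetch a URL, check the status, then parse the JSON body.
// fetchImpl defaults to the real fetch but can be swapped out for a stub.
function loadJson(url, fetchImpl = fetch) {
  return fetchImpl(url)
    .then(checkStatus)
    .then((response) => response.json());
}
```

Because checkStatus throws, a bad status skips the json() step and lands in whatever catch() you attach to the end of the chain, for example loadJson("myAPI/whereIGetStuff.com").then(handleData).catch(oopsErrorHappened), where handleData is whatever callback you want to receive the data.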

3. Get the body object that is asynchronously returned by step 2 and process it however you want.

When we call fetch() to get data from some server, the second step, the parsing of the Response into JSON or text, is itself an asynchronous operation.

So another Promise object is created, which we then pass another callback function to invoke when this second Promise is resolved:

fetch("myAPI/whereIGetStuff.com")

.then(response => response.json())

.then(myData => console.log(myData))

This callback function is where we finally get to handle our data!

Goodbye Yellow Brick Road

Now, we have come so far!

You were introduced to the history, and therefore necessity of asynchronous code, along with its utility in promoting interactivity in webpages.

You were introduced to the fundamental technology of asynchronous code: the Promise.

And finally you were introduced to one of the most popular and useful APIs for working asynchronously: the Fetch API.

Now go forth, and happy asynchronous development!

References:

Marcos Sandrini, JavaScript: its history, and some of its quirks, Nov 3 2021, https://msandrini.medium.com/javascript-its-history-and-some-of-its-quirks-6d5da5e8a47

Aaron Swartz, A Brief History of Ajax, Dec 22 2005, http://www.aaronsw.com/weblog/ajaxhistory

Jesse James Garrett, Feb 18th 2005, Ajax: A New Approach to Web Applications, https://courses.cs.washington.edu/courses/cse490h/07sp/readings/ajax_adaptive_path.pdf

Using Promises – JavaScript | MDN. Developer.mozilla.org. https://developer.mozilla.org/en-US/docs/Web/JavaScript/Guide/Using_promises. Published 2021. Accessed December 16, 2021.

Promise – JavaScript | MDN. Developer.mozilla.org. https://developer.mozilla.org/en-US/docs/Web/JavaScript/Reference/Global_Objects/Promise. Published 2021. Accessed December 16, 2021.

Fetch API – Web APIs | MDN. Developer.mozilla.org. https://developer.mozilla.org/en-US/docs/Web/API/Fetch_API. Published 2021. Accessed December 17, 2021.

Flanagan D. Javascript: The Definitive Guide. 7th ed. Sebastopol: O’Reilly; 2020.

What is the correct terminology for javascript promises. Stack Overflow. https://stackoverflow.com/questions/29268569/what-is-the-correct-terminology-for-javascript-promises. Published 2015. Accessed December 17, 2021.

Ah, old memories.

Ah, old memories.

“And some things that should not have been forgotten were lost.”

“And some things that should not have been forgotten were lost.” “I promise to return the result of this asynchronous operation, come hell or high water!”

“I promise to return the result of this asynchronous operation, come hell or high water!” XMLHttpRequest: “Hey, you better watch the way you talk about me!”

XMLHttpRequest: “Hey, you better watch the way you talk about me!” Thank Goodness! All my problems are solved! This must be Heaven!

Thank Goodness! All my problems are solved! This must be Heaven! Give me your basic authorization credentials in your HTTP request header NOW!

Give me your basic authorization credentials in your HTTP request header NOW! “Theres no place like the render tree, Toto!”

“Theres no place like the render tree, Toto!” A byte which evaluates to the characters ’77’

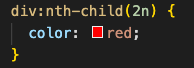

A byte which evaluates to the characters ’77’ Looks like there’s CSSomething cool going on here with this tree

Looks like there’s CSSomething cool going on here with this tree Its sort of like assembling the scaffolding for a house…

Its sort of like assembling the scaffolding for a house…